Over the last few years I have been volunteering at a couple of autonomous robotics competitions for sixth-form students: Student Robotics, which runs over six months, and SourceBots, a one-week spin-off summer school. In these events the student teams design and build a fully autonomous robot to compete in a game. Unlike many of the robotics competitions seen on television, these are not robot battles; the games are usually based on moving tokens, labelled with computer vision markers, into scoring areas in the arena.

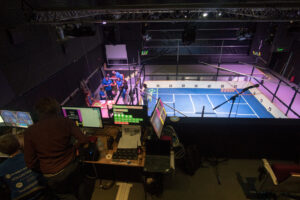

Both of these events culminate in a competition where the robots compete against each other in a series of league matches and then a set of knockout matches. The competition is very fast paced, with matches lasting a couple of minutes and similarly short gaps between them to reset the arena and get the next set of robots in, so keeping to the schedule is critical. The competition is also very much a spectator event, with many teams bringing friends and family along to watch. To make the competition run smoothly and maintain a high production value, there is a lot of tech behind the scenes, which has been iterated upon over the years we have been running it. The video below is from the livestream and gives a good sense of the event for those who haven’t seen one in person.

Logistics

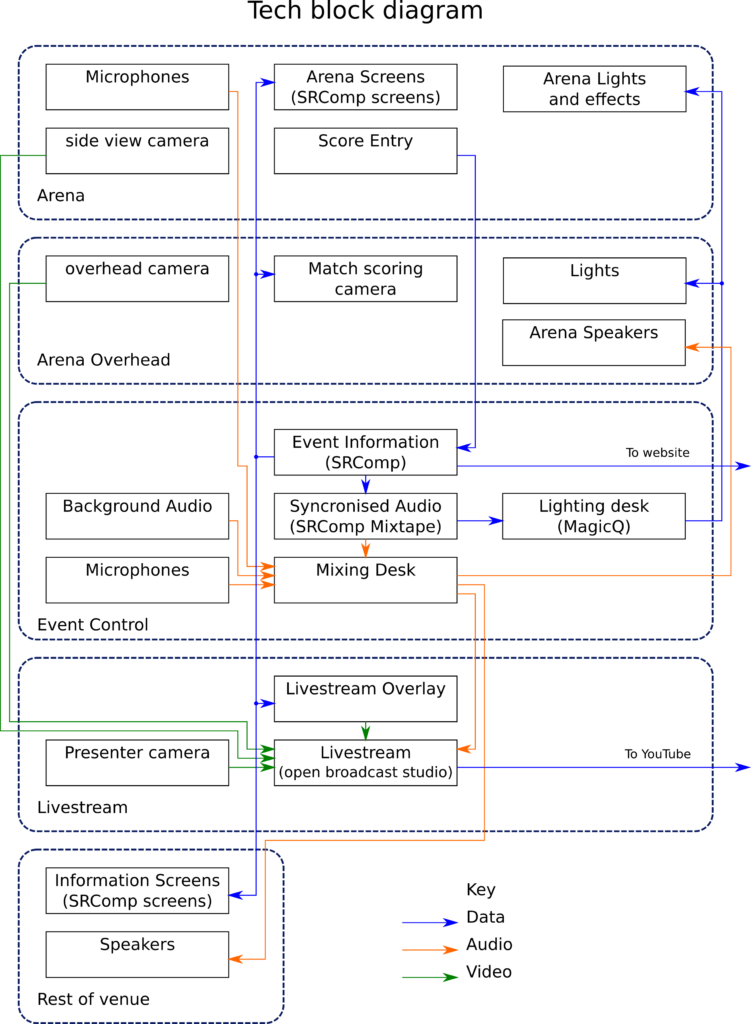

The heart of the system is a piece of software called SRComp, which was written to run the competition. SRComp contains a version-controlled compstate, which includes the event schedule, details of the teams, which teams are in each match, and the scores. Edits to the compstate, such as rescheduling a match or recording scores, are pushed to SRComp, which validates the new state and then informs the other systems of the update. To connect the other systems, SRComp provides both an API and an event stream which announces upcoming matches and any compstate updates. Systems connecting to SRComp use the API and event stream to populate their internal state, then use their own clocks to trigger events at the appropriate times, which avoids timing issues caused by network packet loss. On the same box as SRComp is a local NTP server, which keeps every system’s clock synchronised throughout the competition. Even a small amount of clock drift between the arena systems noticeably degrades the look of the event, and larger timing differences cause confusion.
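The clock-based triggering described above can be sketched roughly as follows. This is a minimal illustration, not SRComp’s actual client code: the `Match` fields and the `due_matches` helper are hypothetical names, and a real client would fill its cached schedule from the API and event stream rather than by hand.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Match:
    # Hypothetical shape; the real API payload may look different.
    number: int
    start: datetime


def due_matches(matches, last_check, now):
    """Return the matches whose start time falls in (last_check, now].

    The start times are cached locally, so a dropped network packet
    cannot delay a trigger; only the local, NTP-synchronised clock matters.
    """
    return [m for m in matches if last_check < m.start <= now]


# Example: a cached schedule with two matches five minutes apart.
t0 = datetime(2020, 1, 1, 10, 0, tzinfo=timezone.utc)
schedule = [Match(0, t0), Match(1, t0 + timedelta(minutes=5))]

# Polling one second either side of the first start time finds match 0 only.
fired = due_matches(schedule, t0 - timedelta(seconds=1), t0 + timedelta(seconds=1))
```

A client built this way only needs the network to learn about schedule changes; the moment of triggering is decided entirely from local state.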

The score entry system is used to record the match state at the end of each match and add it to the compstate; SRComp then calculates the match and league points accordingly. As well as the scores recorded by the match marshals, a new feature this year is a Raspberry Pi and camera located in the lighting truss, set to take photos of the arena at the start and end of every match. This provides a useful reference when the marshals’ scores disagree or a team queries its score.

There are team pits spread around the venue where the students can work on their robots when not competing, and we provide information screens to keep teams informed of the schedule, match scores, and league rankings throughout the event. This is done using the SRComp screens webpages, which use the SRComp API and event stream to populate an information page displayed in a full-screen web browser on a Raspberry Pi attached to every screen. As well as the information screens in the pits, the SRComp screens system is also used for the other displays in the venue. The most noticeable of these are the displays on the arena for spectators, which show which teams are in which starting area and display match timings.

Behind the scenes the SRComp screens also provide information for the shepherding and staging teams. The shepherding team see a list of which teams are up next and which area of the building they are in, allowing the shepherding volunteer for each area to summon teams to the arena (as otherwise teams could miss matches). Once teams are at the arena, the robot staging team uses the staging info to add the corner markers to the correct robots and to stop teams entering the wrong matches.

The information from SRComp is also used on the main website during the competition. The website runs its own instance of SRComp, to which the local dataset is pushed whenever it changes. In addition to the screens and other dynamic systems, we also have scripts that use the API in various ways, such as generating printed schedules and shepherding info, or producing the list of awards for the prizegiving.

Theatrics

With the logistics covered it’s time to get onto the exciting features such as the sound and lighting.

The arena lighting serves several functions. The most important is to give a good wash over the arena, which helps the computer vision on the robots detect the markers on the tokens. Another is to build the event atmosphere by adding interest to the arena outside of matches. The majority of the lighting hardware is located on the suspended truss above the arena, and most of the fixtures used are moving heads. In recent years this has largely consisted of 8 Chauvet Rogue R1 beams, 8 Chauvet Rogue R2 washes and 8 Chauvet Intimidator LED350 spots. In this year’s rig we also used an extra 4 Chauvet Rogue R1 beams, 6 Chauvet Rogue R2 spots, 8 Source 4 ETC junior profiles and 3 Source 4 ETC zoom profiles. Additional lighting is often also added to the arena itself, depending on the theming of the game (often LEDJ 7Q5 RGB par cans).

The arena lighting is driven by a computer running the ChamSys MagicQ lighting software with a Maxi Wing attached. The lighting desk is programmed with cues which step through the appropriate match states and transitions and control the lights accordingly. MagicQ supports remote control over IP networks, which allows us to trigger it based on the SRComp information. The triggering is done by SRComp mixtape, whose main purpose is delivering the synchronised audio, which I will cover later. (For those wondering why the lighting triggering lives in our music playback software: the first prototype of the automatic lighting played beeps into the console’s audio input and used “audio bumps go” for triggering, as we had the venue’s non-networked MagicQ console for that summer school.) Mixtape uses the SRComp event stream and triggers actions at given times before or after the start of a match. In the case of the lighting, the action is to send “ChamSys Remote Ethernet Protocol” packets to the lighting machine. At the end of the final match the same system is also used to fire four confetti cannons on the corners of the arena. During the prizegiving after the matches, the lighting console is operated manually.
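The idea of triggering actions at offsets relative to the match start can be sketched like this. It is only an illustration under stated assumptions, not mixtape’s real configuration or code: the action table, the 150 second match length, the `plan_actions` and `send_udp` helpers and the payload bytes are all hypothetical, UDP transport is assumed, and the payloads are placeholders rather than valid ChamSys packets.

```python
import socket
from datetime import datetime, timedelta, timezone

# Hypothetical action table: (seconds relative to match start, payload).
# The payload bytes are placeholders, not real ChamSys packets.
ACTIONS = [
    (0.0, b"match-start"),
    (-10.0, b"pre-match"),
    (150.0, b"match-end"),  # assumed match length, for illustration only
]


def plan_actions(match_start, actions=ACTIONS):
    """Turn relative offsets into absolute trigger times, earliest first."""
    planned = [
        (match_start + timedelta(seconds=offset), payload)
        for offset, payload in actions
    ]
    return sorted(planned, key=lambda item: item[0])


def send_udp(payload, host, port):
    """Send a single UDP datagram to the lighting machine (address assumed)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))


start = datetime(2020, 1, 1, 10, 0, tzinfo=timezone.utc)
plan = plan_actions(start)
```

Each entry in `plan` pairs an absolute time with the payload to send, so a loop driven by the local clock could call `send_udp` when each time arrives. The same table could hold a one-off entry for something like the confetti cannons.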

The event uses several audio feeds, which are delivered to various areas of the venue as required. Most of the audio inputs are fairly standard. We use several wireless and wired microphones for the match commentators, which are played in the arena area, and a separate wireless and wired mic for venue announcements. For the majority of the event we use a non-synchronised music playlist which simply runs from a standalone computer.

When we get into the knockouts, we switch to a synchronised playlist with the drops in the music precisely timed to the starts and ends of matches (this cannot be heard on the livestream video above due to licensing restrictions). The music is controlled by SRComp mixtape, which stores the playlist and triggers the correct songs at the correct time. To get the timings right, the system needs to play from WAV files, as the variable latency caused by decompressing MP3 or FLAC files would otherwise put the audio out of sync. In addition, we calibrate the system for the latency of the computer’s audio interface and that of the venue’s sound system. As well as the playlist, mixtape also handles the end-of-match sound effects, which are played for all the matches, and triggers the lighting transitions as discussed earlier. All the audio inputs are fed into a central mixing desk that mixes the feeds for the arena, the team pit areas and the livestream.
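The arithmetic behind the latency calibration above is simple enough to sketch. This is an assumed model rather than mixtape’s actual code: the function name, parameter names and example numbers are hypothetical, and it treats both latencies as fixed delays that can be subtracted from the start time.

```python
def playback_start(match_start_s, drop_offset_s, interface_latency_s, venue_latency_s):
    """Return when to start a track so its drop is heard at match start.

    All values are in seconds. The times are on the shared
    (NTP-synchronised) clock; drop_offset_s is how far into the
    track the drop occurs.
    """
    return match_start_s - drop_offset_s - interface_latency_s - venue_latency_s


# Example: the drop is 42.5 s into the track, with 20 ms of audio
# interface latency and 15 ms of venue sound system latency.
start_at = playback_start(1000.0, 42.5, 0.020, 0.015)
```

Starting the track slightly early by the sum of the two latencies means the sound leaves the speakers, rather than the software, at the intended moment.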

As well as the spectators in the venue, the competitors often have many friends and family who want to watch from home, so we provide a livestream. Historically this has been a webcam feed of the arena with the commentary overlaid. In the last couple of events, however, we have expanded the scope of the livestream to what you can see in the video above.

The core of the livestream is Open Broadcaster Software (OBS), which is often used for livestreaming computer games. We used a pair of GoPro cameras to give a side view and a top-down view of the arena, fed through Elgato HD60 S USB HDMI capture cards to get them into OBS. The top-down camera was also split to provide feeds to screens near the lighting and judging desks, giving extra arena visibility, as the near side of the arena could not easily be seen from the balcony and lighting booth. For the presenter view we used one of the webcams we also provide in the team kits. The livestream overlay is a webpage opened in a web browser internal to OBS, which lets us have a transparent background through which the video is seen. The overlay webpage connects to the SRComp API and event stream, enabling it to change state without input from the presenters. During the first day of the event we arranged photos of all the robots which, once submitted to SRComp, allowed the livestream overlay (and arena screens) to show robot photos in the pre-match info view. For the audio, the livestream presenters have a small mixing desk which lets them mix their own mic with the commentary feed from the main mixing desk in the arena as they require. Control of the stream is handled by a pair of macro keypads (Elgato Stream Decks) with buttons mapped to the scenes and media choices available to the livestream presenters. Despite the feature set, the entire livestream setup was able to fit on an Intel NUC computer, making it very portable (excluding the 3 displays that it used).

Conclusion

Hopefully this look behind the scenes has been interesting. As with any event like this, the tech can only get you so far, and the volunteers who help at the event are the true key to its success. If you’re interested in helping out, we are always open to new volunteers.